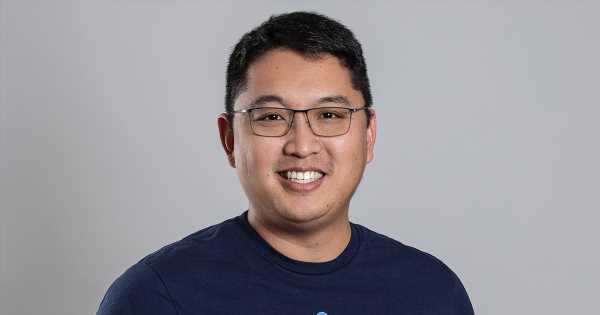

Photo: Aquant

Guardrails are a hot topic in the world of generative AI. With little government regulation in place, the responsibility for creating a safe environment in which users can interact with generative AI is falling on businesses and their leaders.

One of the most frequently cited problems to date is “hallucinations,” in which a generative AI tool produces inaccurate or fabricated information and presents it as fact. These problems become especially apparent when users without adequate experience over-rely on generative AI tools.

Edwin Pahk, vice president of growth at AI-powered service company Aquant, runs his organization with a “clean data” mentality. He believes generative AI has to prioritize the cooperation of its data sources just as much as the correctness of the answers it produces. Correct answers, plus a two-way understanding between AI providers and data sources of how the data will be used, are the foundation of a successful generative AI platform, he contends.

We interviewed Pahk about his focus on data governance, AI tool ethics, guardrails and data validity.

Q. Please talk about your focus on a clean data mentality.

A. Algorithms are only as reliable as the data they are trained on. By fostering strong relationships with data sources and ensuring transparent data collection practices, organizations building AI models can cultivate a mutually beneficial collaboration that promotes accurate and unbiased AI outputs.

The cooperation of multiple data sources lets AI systems glean insights from diverse perspectives, helping to prevent biases and skewed representations. Clean data is also about creating new, synthetic data from expert insights, which can significantly enhance data quality by adding information and filling gaps in the existing dataset.

Q. Let’s discuss AI tool ethics. You say AI tools should be trained only on data from sources that have agreed to share it. How can companies do this?

A. To train AI models only on data from consenting sources, companies need rigorous data collection practices and explicit consent. The process typically includes four steps:

First, clearly communicate the purpose and scope of data collection, providing transparency about how the data will be used.

Second, seek informed consent from individuals or organizations, ensuring they understand the implications and are willing to share their data for the specified purposes.

Third, establish robust data management protocols to ensure data is handled securely and in compliance with privacy regulations.

Fourth, regularly review and update consent agreements, and allow individuals to withdraw their consent if they choose. By following these practices, organizations can train AI models exclusively on data from sources that have given explicit consent, supporting ethical and legal compliance in the data gathering process.
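To make the consent requirement concrete, here is a minimal Python sketch of how a training pipeline might enforce it. It is an editor’s illustration under stated assumptions, not Aquant’s implementation: the `ConsentRecord` and `DataRecord` types, the `has_consent` and `filter_for_training` functions and the `"model_training"` purpose label are all hypothetical. A record is kept only if its source explicitly consented to model training and has not withdrawn that consent; sources with no consent on file are excluded.

```python
from dataclasses import dataclass, field


@dataclass
class ConsentRecord:
    """Hypothetical consent entry for one data source (a person or an organization)."""
    source_id: str
    purposes: set[str] = field(default_factory=set)  # purposes the source explicitly agreed to
    withdrawn: bool = False  # set to True when the source revokes consent


@dataclass
class DataRecord:
    """Hypothetical unit of data collected from a source."""
    source_id: str
    text: str


def has_consent(consents: dict[str, ConsentRecord], source_id: str, purpose: str) -> bool:
    """True only if the source consented to this purpose and has not withdrawn."""
    record = consents.get(source_id)
    return record is not None and not record.withdrawn and purpose in record.purposes


def filter_for_training(records, consents, purpose="model_training"):
    """Keep records whose source currently consents to `purpose`.

    Sources with no consent record are excluded by default.
    """
    return [r for r in records if has_consent(consents, r.source_id, purpose)]


# Example: only source_a has active consent for model training.
consents = {
    "source_a": ConsentRecord("source_a", {"model_training"}),
    "source_b": ConsentRecord("source_b", {"model_training"}, withdrawn=True),
    "source_c": ConsentRecord("source_c", {"analytics"}),  # consented to a different purpose
}
records = [
    DataRecord("source_a", "record 1"),
    DataRecord("source_b", "record 2"),
    DataRecord("source_c", "record 3"),
    DataRecord("source_d", "record 4"),  # no consent on file
]
kept = filter_for_training(records, consents)
print([r.source_id for r in kept])  # ['source_a']
```

Treating a missing consent record as “no consent” is the conservative default. The `withdrawn` flag corresponds to the fourth step: once a source revokes consent, re-running the filter excludes its data from subsequent training sets.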

Q. Guardrails are a big topic in AI. How can organizations set up these guardrails without disrupting the effectiveness of the AI tool itself or inundating employees with safety processes?

A. To establish guardrails in an AI model without disrupting its effectiveness or overwhelming employees, organizations must design AI systems from the ground up to be aligned with human values and to behave safely and ethically. Achieving a fully self-governing AI system is a complex task, but it is possible.

The most critical part of this is thorough testing of data integrity: ensuring the accuracy, completeness and consistency of the data used in the deployment. By rigorously addressing data integrity through QA processes, organizations ensure their models are built on accurate and consistent data, leading to more reliable predictions and insights for end users.
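The three integrity dimensions mentioned here (accuracy, completeness and consistency) can be expressed as automated QA checks. The Python sketch below is illustrative only and is not Aquant’s QA process; the field names (`asset_id`, `model`, `repair_minutes`), the 24-hour plausibility bound and the `check_integrity` function are hypothetical. Because true accuracy can only be confirmed against a trusted reference, the sketch uses a plausibility range as a simple proxy for it.

```python
from collections import defaultdict

REQUIRED_FIELDS = ("asset_id", "model", "repair_minutes")  # hypothetical schema
MAX_REPAIR_MINUTES = 24 * 60  # hypothetical plausibility bound: one day


def check_integrity(records: list[dict]) -> list[str]:
    """Return a list of human-readable integrity issues found in `records`."""
    issues = []
    models_by_asset = defaultdict(set)
    for i, rec in enumerate(records):
        # Completeness: every required field must be present and non-empty.
        missing = [f for f in REQUIRED_FIELDS if rec.get(f) in (None, "")]
        if missing:
            issues.append(f"record {i}: missing {', '.join(missing)}")
        # Accuracy proxy: a repair time, if present, must be a plausible number.
        minutes = rec.get("repair_minutes")
        if minutes not in (None, "") and not (
            isinstance(minutes, (int, float)) and 0 <= minutes <= MAX_REPAIR_MINUTES
        ):
            issues.append(f"record {i}: implausible repair_minutes {minutes!r}")
        # Collect the models recorded for each asset for the consistency check.
        if rec.get("asset_id") and rec.get("model"):
            models_by_asset[rec["asset_id"]].add(rec["model"])
    # Consistency: one asset should not appear under different models.
    for asset, models in sorted(models_by_asset.items()):
        if len(models) > 1:
            issues.append(f"asset {asset}: conflicting models {sorted(models)}")
    return issues


records = [
    {"asset_id": "A1", "model": "X-100", "repair_minutes": 45},
    {"asset_id": "A1", "model": "X-200", "repair_minutes": 30},
    {"asset_id": "A2", "model": "", "repair_minutes": -5},
]
issues = check_integrity(records)
for issue in issues:
    print(issue)
```

One reasonable design is to run checks like these before data enters training or retrieval, and to route flagged records to a reviewer rather than silently dropping them, so that gaps can be corrected at the source.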

Q. Data validity is another key in AI. Please explain how using verified and valid data can help mitigate some risks associated with generative AI tools.

A. Using verified and valid data can help mitigate the risks associated with generative AI tools, because the accuracy and reliability of a model’s output depend on the data behind it.

By leveraging trustworthy data sources, organizations can reduce the chances of generating biased, misleading or harmful content, thereby enhancing the overall integrity and safety of the AI-generated outputs.

Q. With all this in mind, what must CIOs and other health IT leaders at healthcare provider organizations focus on as generative AI tools continue to grow in importance?

A. CIOs and other health IT leaders must focus on several key areas, whether they intend to build an AI system in-house or partner with a vendor.

First, they need to put robust data governance practices in place to maintain the quality, privacy and security of the data these tools use. They should also prioritize ethical considerations, such as transparency, explainability and fairness, to build trust in the AI outputs.

Finally, fostering collaboration among all stakeholders, including clinicians, data scientists and IT teams, is crucial for integrating generative AI tools effectively into clinical workflows while meeting regulatory requirements and patient safety standards.

Follow Bill’s HIT coverage on LinkedIn: Bill Siwicki

Healthcare IT News is a HIMSS Media publication.
